13th Technical Meeting on Plasma Control Systems, Data Management and Remote Experiments in Fusion Research

Note: The meeting will take place virtually. Information on remote participation will be sent to all in due time.

Objectives

The event aims to provide a forum to discuss new developments in the areas of plasma control systems, data management including data acquisition and analysis, and remote experiments in fusion research.

Target Audience

The event aims to bring together junior and senior scientific fusion project leaders, plasma physicists, including theoreticians and experimentalists, and experts in the field of plasma control systems, data management including data acquisition and analysis, and remote experiments in fusion research.


1

Opening

Speakers: John Waterhouse (UKAEA), Matteo Barbarino (International Atomic Energy Agency)


Machine Control 1

Convener: Adriano Luchetta (Consorzio RFX & Consiglio Nazionale delle Ricerche)


2

Challenges for application of IEC61508 to systems for investment protection containing FPGA Off-the-Shelf components: the ITER Interlock System Fast Architecture use case

The ITER Interlock Control System (ICS) requires the application of the IEC 61508 standard to all mission-critical (known as investment protection) control functions. At nuclear fusion facilities, such functions present a unique challenge: events arising from integrated physics processes must be detected and distributed to actuators under hard real-time constraints on the order of single-digit milliseconds, and sometimes microseconds.

Systems that can meet such requirements are often bespoke FPGA-based solutions, which are a well-known challenge for IEC 61508 processes. However, to minimize the variety of components and simplify procurement from an international supplier base, ITER decided to standardize on commercial off-the-shelf (COTS) devices. This raises a third challenge: providing the required level of assurance that a COTS device is of good quality, fit for purpose, and can be integrated adequately into an investment protection control loop with the necessary systematic capability over the development process.

The COTS devices chosen by ITER to realize hard real-time interlock functions require a high-level language and its associated integrated development tools to develop the FPGA functionality. This poses a fourth challenge, as IEC 61508 processes are still oriented toward developments based on hardware description languages (HDLs) rather than on high-level languages such as OpenCL, HLS, MathWorks Simulink or LabVIEW FPGA, which are increasingly used.

This paper explores the method ITER uses to meet these four challenges, with reference to a case-study system architecture with fast, hard real-time requirements. The paper also presents the successes and limitations of attempting to apply rigor throughout the system realization process with COTS devices and high-level languages.

Speaker: Damien Karkinsky (ITER Organization)

3

Study on White Rabbit based sub-nanosecond precision timing distribution system for fusion related experiments

High-speed sampling measurements at more than one gigasample per second have rapidly become common in fusion plasma experiments. At such rates, the phase delay of timing signals such as triggers and clocks becomes large relative to the sampling interval, so a delay compensation mechanism is indispensable for timing synchronization.

White Rabbit (WR) is a high-precision network time synchronization technology developed and improved in the field of large accelerator physics. It is based on IEEE 1588-2008 Precision Time Protocol version 2 (PTP v2), which is now widely used in many fields and industries.

While PTP v2 can synchronize to International Atomic Time (TAI) with sub-microsecond accuracy, WR synchronizes each Ethernet-connected node with sub-nanosecond accuracy.
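The delay compensation that PTP and WR rely on starts from a two-way timestamp exchange. As a minimal sketch, assuming a symmetric link and illustrative timestamp values, the standard IEEE 1588 offset and mean-path-delay calculation from the four timestamps t1 to t4 looks like this. WR's additional hardware phase tracking and link-asymmetry calibration, which give it sub-nanosecond accuracy, are not modelled here.

```python
def ptp_offset_and_delay(t1, t2, t3, t4):
    """Two-way time transfer as in IEEE 1588 (PTP), assuming a symmetric link.

    t1: master sends Sync            (master clock)
    t2: slave receives Sync          (slave clock)
    t3: slave sends Delay_Req        (slave clock)
    t4: master receives Delay_Req    (master clock)
    Returns (offset of slave clock relative to master, mean one-way path delay).
    """
    forward = t2 - t1    # path delay + offset
    backward = t4 - t3   # path delay - offset
    offset = (forward - backward) / 2
    delay = (forward + backward) / 2
    return offset, delay


# Illustrative values in nanoseconds: 500 ns one-way delay, slave clock 120 ns ahead.
offset_ns, delay_ns = ptp_offset_and_delay(t1=0, t2=620, t3=1000, t4=1380)
print(offset_ns, delay_ns)
```

Any asymmetry between the two directions appears directly as an offset error in this calculation, which is why WR calibrates it explicitly.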

Because the design specifications and related information for WR are publicly available as an open hardware project, it is easier to apply to other experimental plants than other industrial high-precision synchronization methods.

Technical surveys and functional verifications have confirmed that WR technology could be applied to the measurement and control systems of fusion experimental devices, provided that some missing functions, such as divided clocks and group operation of multiple nodes, are additionally implemented.


4

As-built design of the control systems of the ITER full-size beam source SPIDER in the Neutral Beam Test Facility

SPIDER, the ITER full-size beam source built at the Neutral Beam Test Facility (NBTF) in Padova, Italy, has been in operation since June 2018. SPIDER's mission is to optimize the operation of the beam source so that the SPIDER experience can be reused on MITICA, the full-size prototype of the ITER Neutral Beam Injector under advanced construction at the NBTF, and in the ITER heating neutral beam injectors.

SPIDER exploitation started with short, low-performance pulses lasting up to a few seconds and progressed to long pulses lasting up to 3000 seconds. At the same time, the integration of plant and diagnostic systems has grown. The amount of data collected and stored per pulse provides a simple measure of this evolution: it has grown from a few tens of MB in the first campaign pulses to the current maximum of over 150 GB, most of it produced by infrared and visible cameras.

From the first operation onwards, the control systems have also evolved and consolidated, including components and functions that were not initially foreseen or were developed only in a preliminary form. This includes the progressive integration of plant and diagnostic systems and of protection and safety functions.

The paper initially focuses on the architecture of the SPIDER control systems that include CODAS, the system delivering conventional control and data acquisition and management, the central interlock system delivering plant protection, and the central safety system delivering people and environment safety. Since all systems have been developed following the guidelines of ITER for the implementation of control systems, the integrated SPIDER control, interlock and safety systems may provide an interesting example for the ITER plant system developers.

The paper then describes how the top-down definition and implementation of operating states and operational scenarios provide the framework for the integration of control, interlock and safety systems and the basic element for successful operation.

Finally, the paper reports on the lessons learned during nearly three years of operation, with particular attention to the progressive, continuous evolution and recommissioning of systems.

Speaker: Mr Cesare Taliercio (Consorzio RFX and CNR-ISTP)


14:40

Break


Machine Control 2

Convener: Bingjia Xiao (Institute of Plasma Physics, Chinese Academy of Sciences)


5

Event reconstruction using KSTAR FIS event counter in hot KSTAR plasma

The event counter of the Korea Superconducting Tokamak Advanced Research (KSTAR) Fast Interlock System (FIS) assigns counter values to the various events occurring during a plasma discharge. It is one of the KSTAR FIS functions and makes it possible to check the order in which events occur. It runs on the FPGA operating clock, synchronized with the timing signal received from the KSTAR Time Synchronization System (TSS). Each event carries time information recorded at its occurrence, and analyzing this information reveals the sequence and context of events. The counter has a resolution of 10 microseconds and covers almost all events related to KSTAR plasma discharges. Collecting events at high speed during plasma discharges and recording their occurrence times has proven very useful for debugging the Plasma Control System (PCS) and for understanding the operating status of the device. This paper presents the implementation and operational results of the KSTAR FIS event counter.
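A minimal sketch of the event reconstruction idea, assuming the counter is zeroed at a reference trigger from the TSS and that each logged event carries the latched counter value. The event names and counter values below are hypothetical, not taken from KSTAR logs; only the 10 µs resolution comes from the abstract.

```python
from typing import NamedTuple

TICK_US = 10  # counter resolution stated in the abstract: 10 microseconds


class Event(NamedTuple):
    name: str     # hypothetical event label
    counter: int  # counter value latched by the FPGA when the event occurred


def reconstruct_sequence(events):
    """Order events by counter value and convert ticks to milliseconds.

    Assumes the counter is zeroed at a reference trigger from the timing
    system, so counter * TICK_US is the time since that trigger.
    sorted() is stable, so events latched in the same tick keep input order.
    """
    ordered = sorted(events, key=lambda e: e.counter)
    return [(e.name, e.counter * TICK_US / 1000.0) for e in ordered]


log = [
    Event("PF_PS_FAULT", 123_456),
    Event("PLASMA_CURRENT_LOW", 123_450),
    Event("NBI_BEAM_OFF", 123_460),
]
for name, t_ms in reconstruct_sequence(log):
    print(f"{t_ms:10.2f} ms  {name}")
```

With a 10 µs tick, two events whose counter values differ by one are separated by at least 10 µs; events in the same tick cannot be ordered by the counter alone.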

Speaker: Mr Myungkyu Kim (Korea Institute of Fusion Energy)

6

The final design of the ITER Interlock Discharge Loop Interface Boxes (DLIB) and its compliance with the IEC 61508 standard.

The Central Interlock System (CIS) is in charge of implementing the ITER central investment protection functions. A dedicated architecture based on hardwired loops will be responsible for protecting the superconducting magnet system. These loops run transversally across the plant, connecting all systems directly involved in magnet protection. Coordination between the hardwired loops and the different users is based on a common interface called the DLIB (Discharge Loop Interface Box).

The IEC 61508 standard, which defines the ‘Functional Safety’ provisions for I&C systems, has been used as the guideline for defining the lifecycle of the device, from specification through to operation and maintenance of the investment protection function performed by the DLIB.

The overall dependability of the DLIB has been improved and demonstrated through a detailed verification and validation process, including:

- Safety Integrity Level (SIL) analysis based on the FMEDA method

- Manufacturing tests to identify any issue related to the series production of the component.

- Early Stage Screening to identify latent defects.

- Qualification testing to identify any externally induced defects.

- Accelerated life testing to emulate the end-of-life behavior of the DLIB.

The paper summarizes the whole process, from design to final validation, of the Discharge Loop Interface Boxes (DLIBs) that will be used to coordinate the Fast Energy Discharge protection of the ITER magnets.
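For readers less familiar with FMEDA, the sketch below shows how failure-mode rates are typically aggregated into the safe failure fraction (SFF) and diagnostic coverage (DC) as defined in IEC 61508-2: SFF = (λS + λDD) / (λS + λDD + λDU) and DC = λDD / (λDD + λDU). The failure modes, their classification and their rates are hypothetical placeholders, not DLIB results.

```python
from dataclasses import dataclass


@dataclass
class FailureMode:
    name: str
    rate_fit: float  # failure rate in FIT (failures per 1e9 device-hours)
    category: str    # "S" safe, "DD" dangerous detected, "DU" dangerous undetected


def fmeda_summary(modes):
    """Aggregate FMEDA rows into SFF, DC and the dangerous undetected rate.

    Assumes at least one dangerous failure mode is present (otherwise DC is undefined).
    """
    totals = {"S": 0.0, "DD": 0.0, "DU": 0.0}
    for m in modes:
        totals[m.category] += m.rate_fit
    lam_s, lam_dd, lam_du = totals["S"], totals["DD"], totals["DU"]
    sff = (lam_s + lam_dd) / (lam_s + lam_dd + lam_du)
    dc = lam_dd / (lam_dd + lam_du)
    return sff, dc, lam_du


# Hypothetical failure modes and rates, for illustration only.
rows = [
    FailureMode("relay contact welded", 20.0, "DD"),
    FailureMode("relay coil open", 30.0, "S"),
    FailureMode("opto-coupler stuck on", 5.0, "DU"),
    FailureMode("supply undervoltage", 45.0, "S"),
]
sff, dc, lam_du = fmeda_summary(rows)
print(f"SFF = {sff:.1%}, DC = {dc:.1%}, lambda_DU = {lam_du} FIT")
```

In an actual SIL assessment, the SFF is then combined with the hardware fault tolerance and the element type to determine the maximum claimable SIL.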

Speaker: Ignacio Prieto Diaz (ITER Organization)

7

Data management system for the plant monitoring data in JT-60SA

A data management system for plant monitoring data has been developed for JT-60SA. Plant monitoring data are acquired continuously, 24 hours a day, to monitor the condition of hardware systems of the JT-60SA tokamak such as the baking, cryogenic and vacuum exhaust systems. On the previous device, JT-60, plant monitoring data were not collected into a common database platform; instead, each plant system could access its own data individually, and only for a short period, which was not adequate for monitoring.

For JT-60SA, a new database system has been built to integrate the control and management of the plant monitoring data of many hardware systems into one system. It provides users with stable control and safe management of the acquisition of all plant monitoring data without losing any data transfers. It also provides an environment in which the plant monitoring data of several hardware systems can be compared easily.

Most hardware systems acquire plant monitoring data every second, and these data are transferred from the hardware system to the database every 15 minutes. Each data block is assigned a unique serial number, which enables the administrator to confirm that blocks have been transferred successfully and in order. If a block is missing, its data are inserted later into the appropriate location in the database according to the serial number.
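The serial-number scheme above can be sketched as follows, assuming each transferred block carries an integer serial number and a list of 1 s samples. The `PlantDataStore` class is hypothetical; it only illustrates gap detection and late insertion, not the actual JT-60SA database.

```python
class PlantDataStore:
    """Toy store that places data blocks by serial number (hypothetical structure)."""

    def __init__(self):
        self.blocks = {}  # serial number -> list of samples (one per second)

    def receive(self, serial, samples):
        # A late block simply lands in its slot; ordering comes from the serial.
        self.blocks[serial] = samples

    def missing(self):
        """Serial numbers absent between the lowest and highest received."""
        if not self.blocks:
            return []
        lo, hi = min(self.blocks), max(self.blocks)
        return [s for s in range(lo, hi + 1) if s not in self.blocks]

    def timeline(self):
        """Concatenate samples in serial order, skipping still-missing blocks."""
        return [x for s in sorted(self.blocks) for x in self.blocks[s]]


store = PlantDataStore()
store.receive(1, [10.0, 10.1])
store.receive(3, [10.4, 10.5])  # block 2 lost in transit
print(store.missing())
store.receive(2, [10.2, 10.3])  # retransmitted later
print(store.missing(), store.timeline())
```

Real blocks would hold 900 samples per signal (15 minutes at 1 s); two samples are used here only to keep the example short.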

Plant monitoring data are always associated with their time base data. For example, approximately 2000 kinds of data are transferred from the superconducting coil system, but only two time bases are acquired, because the plant monitoring data of one hardware system basically share the same time base. The time base data are therefore transferred separately to avoid storing duplicate time bases.

The operational status and performance of the plant monitoring database system have been evaluated during actual JT-60SA operation, confirming that the new database system works effectively in integrating the plant monitoring data of many hardware systems.

Speaker: Ms Riho Yamazaki (National Institutes for Quantum and Radiological Science and Technology)

8

Design study of REC-XPOZ network for ITER Remote Experimentation Centre (REC)

As a sub-project of the Broader Approach (BA) activity between Japan and the EU, preparation of the ITER Remote Experimentation Centre (REC) is under way at the Rokkasho Fusion Institute of QST, Japan, toward remote participation in ITER plasma experiments. This study reports current proposals for the REC system, including a segment to be connected to ITER via VPN for remote participation.

Collaboration on remote participation between the REC and ITER CODAC is starting as part of the cooperation arrangement between the BA activity and the ITER project. The REC is expected to connect to the XPOZ-RP segment at the ITER Organization (IO) through a secure channel. A dedicated high-bandwidth layer-2 VPN (L2VPN) between IO and the REC was established in 2020. On the REC side of the L2VPN, a special isolated network segment, hereafter referred to as REC-XPOZ, will be prepared to secure communication between IO and the REC.

A host running CODAC client applications on the CODAC Core System will be securely connected to the REC-XPOZ, and CODAC server applications hosted in the XPOZ segment at ITER will be tested remotely. A server for live monitoring of the ITER experiment and plant status has also been prepared and will likewise be connected to the REC-XPOZ. Live streaming data, received without time-consuming disk I/O, will be visualized on the REC video wall and on other operator interface terminals for remote participants at the REC.

At the REC, all data generated at ITER are planned to be replicated and stored in Rokkasho. A server with SSD storage has been prepared in the REC-XPOZ as the receiver for fast data transfer using MMCFTP. Fast data transfer at 8 Gbps between ITER and the REC was already demonstrated in 2016, and further demonstrations are planned as the physical network between IO and the REC is upgraded.

To promote research activities based on remote ITER experiments, domestic researchers will be given secure and efficient access to the replicated ITER DB at the REC, with sufficient computing resources for analysis. This access must be strictly separated from the REC-XPOZ to preserve the security of the IO-REC L2VPN, and the design study of the REC-SAN (storage area network) is proceeding with this in mind. A possible network structure comprising the REC-XPOZ, the REC-SAN and data analysis resources for domestic researchers, based on data replication over the L2VPN connection, will be discussed.

Speaker: Dr Shinsuke Tokunaga (National Institutes for Quantum and Radiological Science and Technology (QST))


15:40

Break


Plasma Control 1

Convener: Axel Winter (Max Planck Institut für Plasmaphysik)


9

Lessons learned from upgrades to the JET RF real-time control system.

The plasma control system of the Joint European Torus (JET) is distributed and heterogeneous. This modularity has advantages in separating concerns that span several engineering domains, but creates integration challenges. This paper examines these issues in relation to the JET RF real-time control system. It describes how the system software has evolved over decades to respond to project upgrades. These have varied in scale from embedded systems updates, through major RF plant changes and up to facility wide modifications such as the introduction of the ITER-like wall. We highlight lessons learned from having addressed these projects while maintaining reliable operations and conforming to ever stricter quality processes.

Speaker: Mr Alex Goodyear (UKAEA)

10

The architecture and test-bed of the T-15MD tokamak plasma control system

The National Research Center "Kurchatov Institute" is currently modernizing the T-15 tokamak. Plasma parameters on the T-15MD tokamak (current, position, plasma column shape, electron density and energy content) are controlled by an electromagnetic system, dynamic gas injection, and a set of additional plasma heating systems (neutral beam injection, ion-cyclotron, lower-hybrid resonance and microwave).

The individual components of the Power Supply Control System (PSCS) and the Plasma Control System (PCS) are distributed over distances of up to 300 m, and their interaction must be coordinated and synchronized with an accuracy of tens of microseconds.

A key feature of the developed T-15MD PCS is its ability to rapidly design, test, and deploy real-time shot scenario algorithms with the distribution of computing power between subsystems.

The electromagnetic PCS architecture consists of two levels:

1. High application-specific level: model development and linear approximation, calculation of the experiment scenario, controllers design and experiment simulation (Matlab Simulink RT / Linux RT).

2. Process control level: real-time control of plasma parameters (National Instruments (NI) hardware running the LabVIEW RT operating system, with the CS-PF regulator ported from Simulink as a DLL).

In the Hardware-in-the-Loop (HIL) simulation mode (Fig. 1), communication between levels (1) and (2) is implemented by a reflective memory (RFM) network in a “star” topology and by an S-function package within the Simulink RT / Linux RT environment that acts as middleware. The structure of the electromagnetic PCS Simulink model is shown in Fig. 2.

The electromagnetic PCS shown in Fig. 3 has now been implemented. The total data transfer latency in the PCS control cycle does not exceed 1.1 ms, within the required maximum latency of 3.3 ms.

The proposed architecture allows the PCS to be tested and configured before plasma shots, which increases experimental efficiency while reducing costs. In the future, Simulink RT on the PCS DAQ Server is planned to perform real-time plasma equilibrium reconstruction in the magnetic control loop, with PCS data exchange over the RFM network. Infrastructure is planned for simplified integration and testing of third-party control algorithms and plasma-physics codes, including deployment of the plasma equilibrium reconstruction code on a high-performance server integrated in real time with the EMD and the PCS controllers in the operational configuration. Interoperability is provided by the Skiner PTP adapter (data transfer with exact timestamp binding) and RFM. The achievements and advantages of the PCS architecture are:

1) Development of regulators, codes, and models in the Simulink and DINA environment:

- Currently, Linux OS is used with functions ported from Simulink.

2) Adding the HFC PS control system after the T-15MD tokamak physical start-up will be a simple upgrade, without changes to the hardware architecture or software.

3) The use of the RFM network and the implemented decomposition of the hardware kit make it possible to switch quickly and cost-effectively from the operational configuration to the HIL test-bed (Fig. 4) and to expand PCS functionality.

Speaker: Eduard Khayrutdinov (NRC "Kurchatov Institute")

11

Decision logic in ASDEX Upgrade real time control system

One of the key requirements for large tokamak operation is reliable handling of off-normal states, such as failures in some subsystems and combinations of them, not all of which are necessarily known a priori. Handling these issues requires advanced and flexible decision-logic algorithms to ensure a reliable yet scalable system.

Our work focuses on the ASDEX Upgrade (AUG) tokamak. The AUG control system, DCS, already applies diverse decision algorithms to achieve strategic, system-wide goals and to implement defence in depth in individual control functions. Beyond a certain number of states and level of complexity, however, the current algorithms become hard to maintain and scale. In this contribution, we therefore propose Behaviour Trees (BTs) as the backbone of the decision logic, to cope with the complexity and experimental character of tokamak control systems.

BTs are widely and successfully used in robotics and the game industry to design complex real-time behaviours. They have several advantages over traditional methods such as hierarchical finite state machines (HFSMs). Because BTs essentially operate statelessly, they avoid the need to define consistent state transitions between the many nested and concurrent sub-states of a plasma control system. This gives BTs great flexibility and high modularity and makes them easy to maintain and extend.

We show the use of BTs in two examples. The first demonstrates how a BT can define the current experimental goal by selecting the corresponding segment of the ASDEX Upgrade pulse schedule. The second shows the real-time selection of the most suitable diagnostic sources for real-time density evaluation in the presence of multiple diagnostic failures and diverse plasma states at AUG.
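To make the Behaviour Tree idea concrete, here is a minimal sketch of the two standard composite nodes (Sequence and Fallback, also called Selector) applied to a density-source selection like the second example above. The diagnostic names, their priority order and the blackboard layout are hypothetical, not the AUG implementation, and the RUNNING status used by full BT libraries is omitted for brevity.

```python
from enum import Enum


class Status(Enum):
    SUCCESS = 1
    FAILURE = 2


class Condition:
    def __init__(self, name, predicate):
        self.name, self.predicate = name, predicate

    def tick(self, bb):
        return Status.SUCCESS if self.predicate(bb) else Status.FAILURE


class Action:
    def __init__(self, name, effect):
        self.name, self.effect = name, effect

    def tick(self, bb):
        self.effect(bb)
        return Status.SUCCESS


class Sequence:
    """Ticks children left to right; fails as soon as one child fails."""
    def __init__(self, *children):
        self.children = children

    def tick(self, bb):
        for child in self.children:
            if child.tick(bb) is Status.FAILURE:
                return Status.FAILURE
        return Status.SUCCESS


class Fallback:
    """Ticks children left to right; succeeds as soon as one child succeeds."""
    def __init__(self, *children):
        self.children = children

    def tick(self, bb):
        for child in self.children:
            if child.tick(bb) is Status.SUCCESS:
                return Status.SUCCESS
        return Status.FAILURE


def use(source):
    return Action(f"use {source}", lambda bb: bb.update(density_source=source))


def healthy(diag):
    return Condition(f"{diag} ok", lambda bb: bb["health"][diag])


# Hypothetical priority order of density sources, re-evaluated every cycle.
density_tree = Fallback(
    Sequence(healthy("interferometer"), use("interferometer")),
    Sequence(healthy("bremsstrahlung"), use("bremsstrahlung")),
    use("feedforward_reference"),
)

bb = {"health": {"interferometer": False, "bremsstrahlung": True}}
density_tree.tick(bb)
print(bb["density_source"])
```

In this sketch the tree is re-ticked from the root every cycle, so a diagnostic that recovers is selected again automatically without any explicit transition back, which illustrates the stateless property described above.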

Speaker: Mr Igor Gómez Ortiz (Max Planck Institute for Plasma Physics)

12

Startup studies of MT-1 Spherical Tokamak


MT-1, a small spherical tokamak in Pakistan, is a modified version of GLAST-II (Glass Spherical Tokamak) in which the glass vacuum vessel has been replaced by a metallic vessel. Its major and minor radii are 15 cm and 9 cm, respectively. Coil systems for generating the toroidal and poloidal magnetic fields and the toroidal electric field are installed, together with diagnostics such as Rogowski coils, magnetic probes, flux loops, Langmuir probes and emission spectroscopy.

Generating plasma current in a tokamak depends mainly on an impurity-free environment in the chamber and on optimized application of the magnetic and electric fields. The chamber is conditioned first by electric tape heating, then by microwave heating and helium glow discharge. Vacuum conditions are monitored with optical emission spectroscopy and an RGA (residual gas analyzer).

During initial experiments on plasma current generation, it was found that, in addition to other vertical error fields, a strong field generated by eddy currents flowing in the chamber was the main obstacle to discharge initiation. One way to compensate this vertical error field is to apply an equal, externally generated vertical field in the opposite direction. Experiments on plasma current generation were therefore conducted with vertical fields produced by different combinations of vertical field coils installed symmetrically around the chamber. This scheme did not work, because the applied vertical field was suppressed by the strong coupling between the central solenoid and the vertical field coils. To apply the vertical field independently at the start of the pulse, the mutual inductance of the two systems was measured and, based on it, decoupling coils were designed and installed. These efforts resulted in successful generation of plasma current. All signals during the experiments are recorded with an indigenously developed data acquisition system.

Speaker: Dr Muhammad Athar Naveed (PTPRI)
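The mutual-inductance measurement mentioned in the MT-1 abstract can be done in several ways; one common approach, assumed here rather than taken from the authors, is to ramp the current in one coil system and fit the open-circuit voltage induced in the other to v2 = M dI1/dt. The sketch below performs a least-squares fit through the origin and checks itself on synthetic data; the 20 µH value and the ramp are hypothetical.

```python
def estimate_mutual_inductance(t, i_primary, v_secondary):
    """Least-squares estimate of M from v2 = M * di1/dt (open-circuit secondary).

    di1/dt is taken with central differences, so the first and last samples are dropped.
    """
    num = den = 0.0
    for k in range(1, len(t) - 1):
        didt = (i_primary[k + 1] - i_primary[k - 1]) / (t[k + 1] - t[k - 1])
        num += didt * v_secondary[k]
        den += didt * didt
    return num / den


# Synthetic check: a 1 kA/ms ramp through a hypothetical M = 20 uH should induce 20 V.
M_TRUE = 20e-6
t = [k * 1e-5 for k in range(101)]   # 1 ms sampled every 10 us
i1 = [1e6 * tk for tk in t]          # dI/dt = 1e6 A/s
v2 = [M_TRUE * 1e6 for _ in t]       # ideal induced voltage, no noise
M_est = estimate_mutual_inductance(t, i1, v2)
print(M_est)
```

Fitting over many samples rather than dividing a single voltage by a single slope makes the estimate less sensitive to noise on either signal.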

13

Optimizing DCS Real Time framework interfaces for WEST

DCS (Discharge Control System) is the IPP C++ real-time framework for plasma control at ASDEX Upgrade. Since 2016, the 2011 version of DCS has been used routinely for WEST plasma control without any major issue. However, some errors occur in the interfaces with the WEST CODAC infrastructure. Although this is not a safety issue (machine integrity and operator safety are not at risk), the unreliability of the interfaces has had a significant impact on operating time and machine availability. Moreover, technical collaboration was becoming difficult because of the growing differences between the two code bases.

Analysis showed that the way DCS had originally been adapted to WEST's needs was too complex and too different from the DCS way of working. Moreover, the specialized parts of the code used exclusively for WEST operation were not included in the evolution and maintenance process at IPP Garching, so the codes inevitably diverged. To fix these problems, it was decided to revise the specialization of DCS for WEST so that only standard DCS services (called “Application Processes”) are used. This makes it possible to use exactly the same version of DCS at both institutes and to specialize the code only through parameters. WEST will then benefit immediately from all improvements made to DCS, and will serve as a practical test bench to further demonstrate DCS agility and reliability.

The paper describes the new architecture of the DCS integration at WEST and then presents the results obtained. It also stresses the advantages of sharing code and of common software development practices.

Speakers: Julian COLNEL, Mr Rémy NOUAILLETAS (CEA/IRFM Cadarache), Mr Sébastien DARCHE (CEA/IRFM Cadarache), Bernhard Sieglin (Max-Planck-Institut for Plasma Physics), Wolfgang Treutterer (Max-Planck-Institut für Plasmaphysik)

14

Framework Design of the CFETR PCS Simulation Verification Platform

To support CFETR PCS development and discharge scenario optimization, the PCS Simulation Verification Platform (PCS-VP) has been designed and developed. Following layered and modular design principles, the PCS-VP framework is divided into three layers: the device layer, the function layer and the presentation layer. The device layer interacts with the operating system and provides hardware driver modules supporting hardware-in-the-loop simulation between the platform and the PCS or other device subsystems. The function layer provides interpreters and solvers for system simulation, together with model libraries and interfaces to third-party models. The presentation layer provides a visual modeling and simulation environment. The PCS-VP model library greatly simplifies plasma system simulation modeling. It includes a mathematical library for mathematical modeling and basic calculations; a plasma simulation library with a variety of plasma controllers, actuators and plasma response models; and auxiliary modules such as a signal publishing and subscription module and an event injection and exception capture module. PCS-VP also supports customized modules: users can write function modules in C or construct models in Simulink. A prototype of PCS-VP, including the visual simulation environment and part of the model library, has been developed in Python. The EAST poloidal coil current control and power supply models were built with the platform, and closed-loop control tests between these models gave results consistent with those in MATLAB/Simulink, verifying the feasibility of the framework design.

Speaker: Heru Guo


Plasma Control 2

Convener: Sang-hee Hahn (National Fusion Research Institute)


15

Preliminary Application of Hardware-in-the-loop Simulation Technology in EAST PCS Control Simulation

EAST PCS, a Linux cluster equipped with real-time data acquisition and data transmission hardware, executes a series of algorithms for real-time control of plasma parameters. The control performance and reliability of the PCS determine the operational safety of the device and the achievement of physics experiment objectives. To test the performance of the whole control system under realistic working conditions, hardware-in-the-loop (HIL) simulation, a technology widely used in the aerospace, automotive and new energy industries, is applied to EAST PCS control simulation. HIL simulation connects the EAST PCS to digital tokamak models through configured data input/output devices, reproducing the real operating mode of the control system. This research builds the simulation framework with a time synchronization method that aligns hardware time with real physical time.

The HIL simulation framework has two main parts: an upper computer that deploys real-time tasks and a lower computer that executes them. The upper computer's work has three parts: developing a fixed-step physical model in Matlab, developing real-time driver services for the lower computer in LabVIEW, and compiling, deploying and monitoring real-time tasks with VeriStand. The lower computer runs the real-time tasks and exchanges real-time data with the EAST PCS through a reflective memory card. To verify the framework, two models were built for EAST: a simple coil current model and a rigid plasma model. The fixed-step model running on the lower computer and the DMA transmission performance of the reflective memory card were tested; both met the control-cycle requirement of less than 100 µs.
In loop tests, coil current, plasma current and position control gave results consistent with experiment or with the Matlab/Simulink model. HIL simulation thus provides a powerful validation tool for developing control functions in the EAST PCS.

Keywords: Hardware-in-the-loop simulation, Control simulation, VeriStand, PCS
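As an illustration of what a fixed-step simple coil current model inside a control loop looks like, here is a plain-Python sketch (not the VeriStand/Matlab implementation described above): an RL coil integrated with forward Euler at a 100 µs step, closed by a PI current controller. The coil resistance, inductance, closed-loop time constant and reference current are hypothetical values.

```python
DT = 100e-6  # fixed step, chosen to match the <100 us control cycle quoted above

# Hypothetical coil parameters (not EAST values).
R_COIL = 0.01   # ohm
L_COIL = 0.005  # henry


class CoilModel:
    """Simple coil current model: L dI/dt = V - R I, integrated with forward Euler."""
    def __init__(self):
        self.current = 0.0

    def step(self, voltage):
        self.current += DT * (voltage - R_COIL * self.current) / L_COIL
        return self.current


class PIController:
    """PI current controller whose zero Ki/Kp is placed on the coil pole R/L."""
    def __init__(self, tau_closed_loop=0.01):
        self.kp = L_COIL / tau_closed_loop
        self.ki = self.kp * R_COIL / L_COIL
        self.integral = 0.0

    def step(self, reference, measured):
        error = reference - measured
        self.integral += error * DT
        return self.kp * error + self.ki * self.integral


def run_loop(reference=1000.0, duration=0.2):
    coil, pi = CoilModel(), PIController()
    current = 0.0
    for _ in range(int(round(duration / DT))):
        voltage = pi.step(reference, current)  # controller side (the PCS in a real HIL loop)
        current = coil.step(voltage)           # model side (the real-time target)
    return current


print(f"{run_loop():.1f} A")
```

Choosing Ki/Kp = R/L cancels the coil pole, so the continuous-time closed loop is first order with time constant L/Kp (10 ms here). In a real HIL setup the two `step` calls would run on different machines and exchange data through the reflective memory.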

Speaker: Mr Yanci Zhang

16

Application of RTF Architecture in EAST Radiation Control Subsystem

The Experimental Advanced Superconducting Tokamak (EAST) was built to demonstrate high-power, long-pulse operation under fusion-relevant conditions; it has ITER-like fully superconducting coils and a water-cooled tungsten (W) monoblock structure. To build distributed real-time control subsystems and to verify the performance of the ITER real-time framework (RTF), a radiation control subsystem independent of the plasma control system (PCS) was designed and implemented on the ITER RTF. To calculate the radiation power for PCS feedback control during a discharge, this subsystem must obtain discharge information from the Central Control System, acquire diagnostic signals to calculate the radiation power, exchange data with the PCS in each control cycle, and store data in MDSplus for further analysis. A user-friendly graphical user interface (GUI) is also needed to set parameters. To meet these requirements, four RTF function blocks were designed: a communication function block, a data acquisition function block, a radiation calculation function block and a data storage function block. The communication function block handles slow data communication with the Central Control System through sockets and fast data transmission with the PCS through a reflective memory (RFM) network. The acquisition function block synchronously acquires 64 channels of absolute extreme ultraviolet (AXUV) signals at 20 kHz using a D-TACQ196 digitizer. The radiation calculation function block calculates the radiation power from the AXUV signals and plasma boundary data read from the PCS through RFM. The data storage function block saves all data generated by the acquisition and calculation function blocks to an MDSplus tree in segments, in real time. Development of the subsystem in the RTF architecture has been completed, with the GUI written in Python.
A benchmark test using historical data was carried out, and the calculated radiation agrees with the historical data, verifying the effectiveness of each function block and the availability of the hardware. In the 2021 EAST campaign, the subsystem will be used for radiation power calculation, the first application of RTF on EAST.
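The function-block structure described above can be sketched as a fixed-order pipeline that shares a per-cycle context. This is a hypothetical Python illustration, not the ITER RTF API: the communication block (sockets/RFM) is omitted, the radiation calculation is a placeholder weighted sum, and the segment length and 16-bit sample size are assumptions. Only the channel count and the 20 kHz rate come from the abstract.

```python
N_CHANNELS = 64          # AXUV channels, as stated in the abstract
SAMPLE_RATE_HZ = 20_000  # 20 kHz acquisition rate, as stated in the abstract
BYTES_PER_SAMPLE = 2     # assumption: 16-bit raw samples
SEGMENT_SAMPLES = 2_000  # hypothetical segment length (0.1 s at 20 kHz)

RAW_BYTES_PER_S = N_CHANNELS * SAMPLE_RATE_HZ * BYTES_PER_SAMPLE  # 2.56 MB/s of raw data


class AcquisitionBlock:
    """Hypothetical stand-in: reads one 64-channel sample per cycle."""
    def __init__(self, source):
        self.source = source

    def execute(self, ctx):
        ctx["axuv"] = self.source()


class RadiationBlock:
    """Placeholder calculation: weighted sum of channels (not the EAST algorithm)."""
    def __init__(self, weights):
        self.weights = weights

    def execute(self, ctx):
        ctx["p_rad"] = sum(w * s for w, s in zip(self.weights, ctx["axuv"]))


class StorageBlock:
    """Buffers samples and closes a segment every SEGMENT_SAMPLES samples."""
    def __init__(self):
        self.buffer = []
        self.segments = []  # stand-in for segments written to an MDSplus tree

    def execute(self, ctx):
        self.buffer.append((ctx["axuv"], ctx["p_rad"]))
        if len(self.buffer) == SEGMENT_SAMPLES:
            self.segments.append(self.buffer)
            self.buffer = []


def run_cycles(blocks, n_cycles):
    """Execute the blocks in a fixed order once per cycle, sharing a per-cycle context."""
    for _ in range(n_cycles):
        ctx = {}
        for block in blocks:
            block.execute(ctx)


storage = StorageBlock()
pipeline = [
    AcquisitionBlock(lambda: [1.0] * N_CHANNELS),  # constant test signal
    RadiationBlock([0.5] * N_CHANNELS),
    storage,
]
run_cycles(pipeline, 5_000)
print(RAW_BYTES_PER_S, len(storage.segments), len(storage.buffer))
```

Closing segments at a fixed size keeps each write bounded, so storage cost per cycle stays predictable while data are still saved during the discharge.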

Speaker: Mr Junjie Huang (1.Institute of Plasma Physics, Chinese Academy of Sciences 2.University of Science and Technology of China) -

17

Overview of the TCV digital real time plasma control system and its applications

TCV has a flexible, digital, distributed control system for testing experimental control algorithms, acquiring data from hundreds of diagnostic channels and controlling all magnetic, heating and fueling actuators. We present the state of the system, focusing on the latest upgrades and on the key control capabilities they enable. The control algorithm code is developed and maintained in MATLAB/Simulink, and run-time code is generated from it automatically. The previous practice of just-in-time code generation and compilation before every shot has been abandoned in favor of a more reliable and efficient method in which the run-time code loads parameters and waveforms from plant databases. Simulation of the control code is supported by an object-oriented simulation framework in MATLAB/Simulink that reads parameters and waveforms from the same databases as the real-time environment. This approach still allows very rapid development and deployment cycles, with new algorithms usually deployed on TCV within a few days of completing their testing in simulation. The control algorithm software is managed through a DevOps methodology with extensive unit and regression tests as well as Continuous Integration / Continuous Deployment practices.
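The shift from per-shot code generation to run-time parameter loading means the compiled controller stays fixed while only its data changes between shots. A minimal Python sketch of that idea, assuming a dictionary stands in for the plant database; the shot number, the gain `kp` and the piecewise-linear `ip_ref` waveform are invented for illustration, and TCV's actual generated code is not reproduced.

```python
from bisect import bisect_right
from typing import Dict, List, Tuple

# Hypothetical plant database: shot -> tunable parameters and reference waveforms.
PLANT_DB: Dict[int, dict] = {
    70000: {
        "params": {"kp": 0.8},
        # Piecewise-linear waveform as (time [s], value [A]) breakpoints.
        "waveforms": {"ip_ref": [(0.0, 0.0), (0.5, 200e3), (1.5, 200e3), (2.0, 0.0)]},
    }
}


class Waveform:
    def __init__(self, points: List[Tuple[float, float]]):
        self.t = [p[0] for p in points]
        self.v = [p[1] for p in points]

    def __call__(self, time: float) -> float:
        """Linear interpolation, held constant outside the breakpoints."""
        if time <= self.t[0]:
            return self.v[0]
        if time >= self.t[-1]:
            return self.v[-1]
        i = bisect_right(self.t, time)  # t[i-1] <= time < t[i]
        t0, t1 = self.t[i - 1], self.t[i]
        v0, v1 = self.v[i - 1], self.v[i]
        return v0 + (v1 - v0) * (time - t0) / (t1 - t0)


class Controller:
    """Fixed code: only the data loaded before each shot changes."""

    def __init__(self, shot: int, db: Dict[int, dict] = PLANT_DB):
        entry = db[shot]
        self.kp = entry["params"]["kp"]
        self.ip_ref = Waveform(entry["waveforms"]["ip_ref"])

    def step(self, time: float, ip_measured: float) -> float:
        # Illustrative proportional action on the plasma current error.
        return self.kp * (self.ip_ref(time) - ip_measured)
```

Because the simulation framework reads the same database entries, the same `Controller(shot)` construction could in principle serve both simulation and the real-time run.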

The real-time environment has been completely replaced by the F4E MARTe2 framework, greatly improving standardization, modularity, maintainability and extensibility. The intrinsically data-driven way in which MARTe2 builds applications at run time has allowed the inter-shot tunable parameters and waveforms to be integrated into the control code naturally yet rigorously. The framework has also greatly improved the interfaces between the real-time computers and the rest of the TCV IT infrastructure, notably its databases for shot configuration and control data acquisition. On the hardware side, the systems responsible for primary plasma control (magnetic control and density control) have been upgraded with new ADC/DAC modules connected to two real-time computers that can operate in parallel on the same discharge. This arrangement allows one control computer to run the primary (released) main plasma controller while the second is used as a live test stand for plasma control algorithms under testing or development. In addition, a new EtherCAT real-time industrial network has been installed to operate distributed subsystems with low I/O counts, boosting system flexibility at low additional cost and allowing fast commissioning.

This overhaul has already enabled a number of experimental advances on the machine, the foremost being: SAMONE, a comprehensive real-time plasma supervision, off-normal event handling and actuator management system; plasma event detectors based on neural networks; and novel linear controllers for improved vertical control for the formation and stabilization of doublet plasmas. Finally, a number of existing real-time codes have already been ported to this new approach, allowing them to run seamlessly in real time on every TCV discharge. Notably, these comprise RT-LIUQE, the real-time magnetic equilibrium reconstruction of TCV, coupled with real-time transport calculations; RT-MHD, the comprehensive set of real-time MHD analysis algorithms; and real-time divertor radiation front control with multispectral 2D imaging diagnostics (MANTIS). Other applications include runaway and profile control.

Speaker: Cristian GALPERTI (Ecole Polytechnique Fédérale de Lausanne (EPFL), Swiss Plasma Center (SPC), CH-1015 Lausanne, Switzerland) -

18

The Preliminary Design of New Plasma Control System based on real-time Linux cluster for HL-2M

HL-2M is a medium-size tokamak constructed by the Southwestern Institute of Physics (SWIP) in China. First plasma was successfully obtained in 2020 using a plasma control system (PCS) based on LabVIEW RT. To improve plasma control performance, a new PCS based on the DIII-D PCS software framework has been proposed.

There are two concerns in realizing the PCS. First, the real-time performance of the PCS must be guaranteed. Second, the PCS must interface with many existing HL-2M systems. The proposed PCS is deployed on a Linux cluster with two servers: a non-real-time server for messages and waveforms, and a real-time server with a D-TACQ196 DAQ card and two reflective memory (RFM) cards. The real-time operating system has been upgraded and optimized to improve real-time performance. Millions of test measurements indicate that the jitter of the system is less than $6\,\mu\mathrm{s}$, which satisfies the system's real-time requirement. In addition, EPICS has been introduced for message synchronization with the Central Control System and other existing systems. Meanwhile, RFM devices are used to transfer real-time data in each control cycle. To extend the number of data acquisition channels, a D-TACQ2106 has been integrated into the new system. The initial system includes basic control algorithms (e.g., coil current control for the CS and PF coils, density control and the corresponding failure detection). The new PCS has been preliminarily verified with HL-2M historical data in simulation mode, and it outputs control commands correctly in the integrated environment.
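Jitter figures such as the one quoted above are commonly derived by timestamping every control-cycle wake-up and comparing each interval with the nominal period. A minimal Python sketch of that bookkeeping, assuming the timestamps are already available in nanoseconds; the abstract does not state how HL-2M measured or defined its jitter, so the definition used here (deviation of each interval from the period) is an assumption.

```python
from statistics import mean
from typing import Dict, Sequence


def cycle_jitter(timestamps_ns: Sequence[int], period_ns: int) -> Dict[str, float]:
    """Return jitter statistics, in microseconds, for a periodic loop.

    Jitter is taken here as the deviation of each measured cycle interval from
    the nominal period; other definitions (e.g. deviation from an absolute
    schedule) are also in common use.
    """
    if len(timestamps_ns) < 2:
        raise ValueError("need at least two timestamps")
    deviations = [(b - a) - period_ns for a, b in zip(timestamps_ns, timestamps_ns[1:])]
    abs_dev_us = [abs(d) / 1000.0 for d in deviations]
    return {
        "max_us": max(abs_dev_us),
        "mean_us": mean(abs_dev_us),
        "cycles": float(len(deviations)),
    }


def meets_requirement(stats: Dict[str, float], limit_us: float) -> bool:
    """True if the worst-case jitter stays below the given limit."""
    return stats["max_us"] < limit_us
```

With a 100 µs period (a made-up value) and timestamps `[0, 100_000, 201_000, 300_500]`, the intervals deviate by 0, 1000 and 500 ns, so the worst case is 1.0 µs, well inside a 6 µs limit.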

In the next phase, more control algorithms will be integrated and more tests will be carried out to verify system reliability.

Speaker: zhongmin huang (ASIPP) -

19

Use of MARTe2 to enhance the JET Real-Time Central Controller.

JET experiments include feedback control implemented via the real-time central controller (RTCC). This system is highly data driven. Controllers can be adapted and tuned during experimental sessions by expert users. The original implementation has been expanded and updated many times. For future campaigns, further growth in terms of both capacity and capability is desirable, but this is not practical within existing constraints. A new system to provide improved functionality based on use of the MARTe2 framework has been designed and prototyped. We report on the project status, including a particular focus on the quality processes that have been used to minimise deployment risks in a mature environment at a critical time. We also outline the future roadmap for developing the application and supporting ecosystem which could have benefits in other contexts.

Speaker: Mr Chris I. Stuart (United Kingdom Atomic Energy Authority) -

20

First Applications of MARTe2/MDSplus/Simulink framework for real-time control applications.

A set of MDSplus devices that abstract MARTe2 components and their communications has been developed. As these are applied to real applications, they are being refined and expanded. Generic versions of the Simulink and Python GAMs have been developed, obviating the need to create a new MDSplus device type for each Simulink component or Python routine. These provide a mechanism to quickly integrate new Simulink and Python modules into control systems using the framework.

The framework has been used for two applications at the ITER Neutral Beam Test Facility. The first application provides the required management of acceleration grid breakdowns: it switches off the grid power supplies and, after a given amount of time, drives the power supply again following a given waveform. In this case the system is driven by a 1 kHz clock, receives an asynchronous trigger whenever a breakdown occurs, and uses a DAC device to generate the required waveform in real time. In the second application, a set of algorithms is implemented to derive online calorimetric measurements in the cooling system. This system, running at 10 Hz, is driven by the reception of around 100 input signals communicated via MDSplus events and produces a similar number of output signals, which are both stored in the MDSplus pulse file and sent out again via MDSplus events for online display.
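The breakdown-management sequence (switch the grid supplies off on a breakdown trigger, wait a given time, then drive the supply along a waveform) maps naturally onto a small state machine evaluated once per 1 kHz cycle. A minimal Python sketch; the state names, the hold time counted in cycles and the recovery ramp are hypothetical, and the facility's actual implementation (MARTe2 components configured through MDSplus devices) is not reproduced here.

```python
from enum import Enum, auto
from typing import List


class State(Enum):
    ON = auto()        # normal operation, supply follows its nominal reference
    OFF = auto()       # supplies switched off after a breakdown
    RECOVERY = auto()  # supply driven along the recovery waveform


class BreakdownManager:
    """Evaluated once per clock cycle; all timing is counted in cycles (1 ms at 1 kHz)."""

    def __init__(self, off_cycles: int, recovery_waveform: List[float], nominal: float):
        self.off_cycles = off_cycles
        self.recovery = recovery_waveform  # assumed non-empty
        self.nominal = nominal
        self.state = State.ON
        self.counter = 0

    def step(self, breakdown: bool) -> float:
        """Return the reference for this cycle (0.0 means supply off)."""
        if breakdown:
            # A trigger in any state (re)starts the off period.
            self.state, self.counter = State.OFF, 0
        if self.state is State.OFF:
            self.counter += 1
            if self.counter >= self.off_cycles:
                self.state, self.counter = State.RECOVERY, 0
            return 0.0
        if self.state is State.RECOVERY:
            value = self.recovery[self.counter]
            self.counter += 1
            if self.counter >= len(self.recovery):
                self.state = State.ON
            return value
        return self.nominal
```

For example, with `off_cycles=2`, a recovery ramp `[0.25, 0.5, 0.75]` and a nominal value of 1.0, a single trigger on the second cycle yields the output sequence `1.0, 0.0, 0.0, 0.25, 0.5, 0.75, 1.0`.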

At MIT, a control system demonstration platform has been constructed to test and learn about the framework and about real-time computing and networking platforms. It is a 10 cm levitated magnet that can run the complete architecture of a distributed, multi-timescale control system, including supervisory control, alternate scenarios (soft landing) and actuator sharing. It will be used both to validate the framework for use with the SPARC tokamak and to drive the development of new features.

Speaker: Joshua Stillerman (MIT Plasma Science and Fusion Center)

-

15

-

15:00

Break

-

Database techniques for Information

Convener: Ms Mikyung Park (ITER Organization)

-

21

The metadata management system based on MongoDB for EAST experiment

EAST experiments use the MDSplus database for data storage, and users access the data through the API it provides. MDSplus stores abundant experimental data but lacks a resource directory, which makes it difficult for users to get a quick overview of the experimental data.

This paper proposes a metadata database solution based on MongoDB. First, the system uses C++ to scan the whole database and to extract and integrate all the metadata. Then, starting from the basic BSON document, the system uses nested documents and document arrays to gather all the metadata of each shot into a single document, with each individual metadata item constructed as a stream document, to establish the metadata database. Next, the system encapsulates interfaces for typical queries and cross-shot statistics, and optimizes performance using indexes. Finally, a metadata front-end display service based on Spring Boot and MyBatis provides users with navigation of the database resources.
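The "one document per shot, with nested arrays of metadata sub-documents" layout can be illustrated with plain Python dictionaries that mirror the BSON structure. The field names (`shot`, `trees`, `signals`, `units`, `length`) are hypothetical, and the queries are emulated in memory; in MongoDB itself, the equivalent cross-shot lookup would use dot notation on the nested field (e.g. a filter on `trees.signals.name`) backed by an index.

```python
from typing import Dict, Iterable, List


def build_shot_document(shot: int, scan: Dict[str, List[dict]]) -> dict:
    """Gather all metadata of one shot into a single nested document.

    `scan` maps an MDSplus tree name to the node descriptions found by a
    (hypothetical) database scanner.
    """
    return {
        "shot": shot,
        "trees": [
            {"tree": tree, "signals": sorted(nodes, key=lambda n: n["name"])}
            for tree, nodes in sorted(scan.items())
        ],
    }


def shots_with_signal(docs: Iterable[dict], name: str) -> List[int]:
    """Cross-shot query: which shots contain a signal called `name`?"""
    return sorted(
        doc["shot"]
        for doc in docs
        if any(sig["name"] == name for t in doc["trees"] for sig in t["signals"])
    )


def signal_count_per_shot(docs: Iterable[dict]) -> Dict[int, int]:
    """Simple cross-shot statistic: number of stored signals per shot."""
    return {doc["shot"]: sum(len(t["signals"]) for t in doc["trees"]) for doc in docs}
```

Because every shot's metadata lives in one document, a per-shot overview is a single lookup, while cross-shot questions become filters over the collection.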

The design and implementation of the metadata database help users quickly understand the general state of the database resources and make access to experimental data efficient and simple.

Speaker: Feng Wang (Institute of Plasma Physics, Chinese Academy of Sciences) -

22

Implementation of data integration toolkit for ITER Physics Data Model

The key to developing integrated modeling and data analysis tools is to enable data exchange between different data sources (experimental databases, I/O for simulation codes, etc.). A data integration toolkit (SpDB) was developed to access different data sources using a global unified schema defined by the ITER Physics Data Model. Data are described by a data schema and a data format. The definition of the data schema changes frequently as requirements are updated, whereas the format of a data source remains stable. In the implementation of SpDB, data format conversion and data schema mapping are therefore separated. As a result, SpDB maintains a relatively stable API with the data sources while adapting to frequent changes in the data model.
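The separation between format conversion and schema mapping can be sketched as two independent layers: format readers that only know how to fetch a raw item from one kind of source, and a mapping table from unified-schema paths to source-specific locations plus unit conversions. This is not the SpDB API; the reader class, the `summary/ip` path and the `ip_ma` location are illustrative assumptions.

```python
from typing import Callable, Dict, Protocol, Tuple


class FormatReader(Protocol):
    """Format layer: knows how to read a raw item from one kind of data source."""

    def read(self, location: str) -> object: ...


class DictReader:
    """Stand-in for a real backend reader (e.g. MDSplus or HDF5)."""

    def __init__(self, store: Dict[str, object]):
        self.store = store

    def read(self, location: str) -> object:
        return self.store[location]


# Schema layer: unified path -> (source name, source-specific location, converter).
SchemaMap = Dict[str, Tuple[str, str, Callable[[object], object]]]


class UnifiedAccess:
    def __init__(self, readers: Dict[str, FormatReader], mapping: SchemaMap):
        self.readers = readers
        self.mapping = mapping

    def get(self, path: str) -> object:
        source, location, convert = self.mapping[path]
        return convert(self.readers[source].read(location))
```

Usage: with `readers = {"exp": DictReader({"ip_ma": 0.5})}` and `mapping = {"summary/ip": ("exp", "ip_ma", lambda v: v * 1e6)}`, `UnifiedAccess(readers, mapping).get("summary/ip")` returns `500000.0`. When the data model is revised, only `mapping` is edited; the readers, and hence the interface to each data source, stay untouched.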

Speaker: Ms XIAOJUAN LIU ( Institute of Plasma Physics Chinese Academy of Sciences) -

23

FAIR4Fusion - Making Fusion Data FAIR

FAIR data, that is, making data findable, accessible, interoperable and reusable, is being increasingly adopted across a number of disciplines for many reasons. Within the fusion community, at least with regard to experimental data, each site implements elements of the FAIR principles, but there is a lack of infrastructure providing harmonised search and access mechanisms for data at multiple sites. The goal of the FAIR4Fusion project is to develop demonstrators that realise the benefit of a community-based FAIR approach to metadata integration, and to design a blueprint architecture for a fully featured service that meets all of the gathered user requirements, plus additional requirements arising from the demonstrators, and that covers not only experimental data but also modelling and simulation data.

In this talk, we introduce the FAIR concepts and how we anticipate they can be applied across the community while maintaining each site's autonomy and existing infrastructures and processes. We also show how this can be achieved, at least within the scope of this project, by building upon existing work already performed by the community, thereby reducing implementation costs, and we describe efforts to generalise this to improve both scalability and performance. We also introduce the initial blueprint architecture and seek input from the audience on additional requirements.

Speaker: Shaun de Witt (UKAEA) -

24

Architecture for the implementation of the Fusion FAIR Data Framework

Currently, largely for historical reasons, almost all fusion experiments use their own tools to manage and store measured and processed data, as well as their own ontology. Thus, very similar functionalities (data storage, data access, data model documentation, cataloguing and browsing of metadata) are often provided differently from one experiment to another. The overall objective of the Fair4Fusion project is to demonstrate the impact of making experimental data from fusion devices more easily findable and accessible. The main step towards this goal is to improve the FAIRness of fusion data so that scientific analysis becomes interoperable across multiple fusion experiments. Fair4Fusion is preparing a blueprint report that proposes a long-term architecture for implementing a Fusion Open Data Framework.

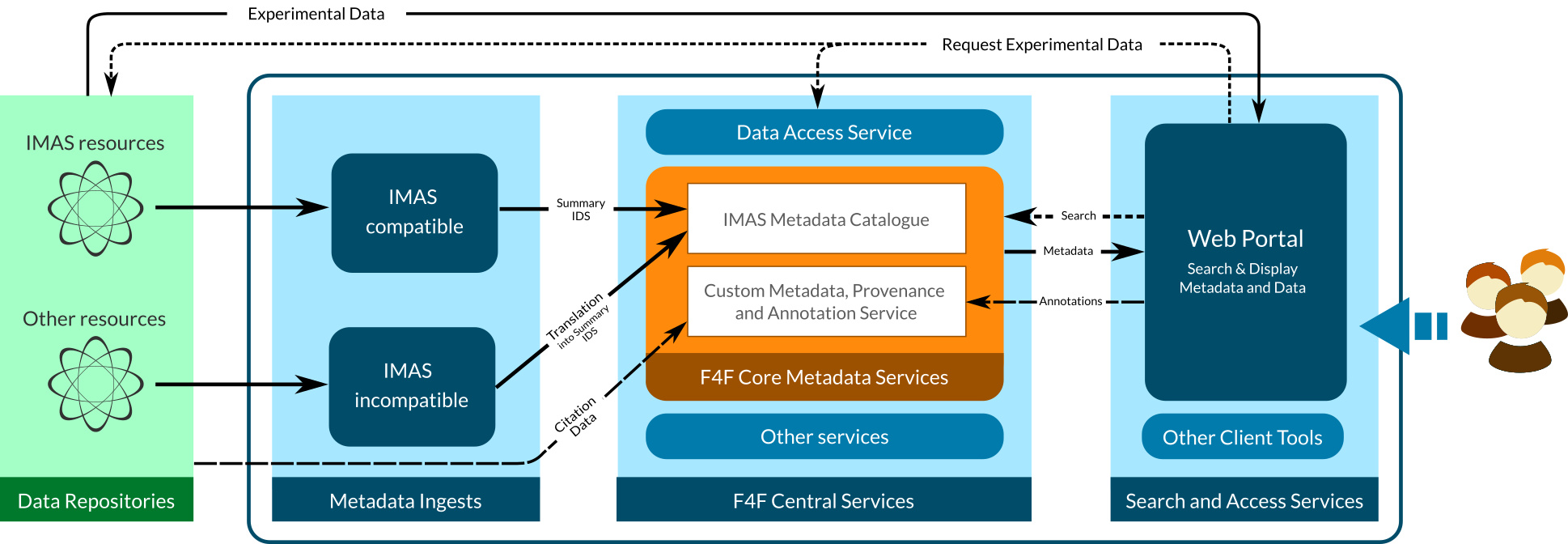

User stories about searching for and accessing data and metadata, including stories from the perspective of data providers, were collected. These use cases present the different perspectives of members of the general public, EUROfusion researchers and data providers, who are the main target users of the analyzed scenarios. The basic requirements and user stories have been transformed into a list of functionalities to be fulfilled. These functionalities have been grouped into several general categories (search; visualisation and accessing outputs; reports; user annotation; metadata management; subscriptions and notifications; versioning and provenance; authentication, authorization and accounting; licensing) and are related to different FAIR aspects. The collection of requirements and functionalities has been used as the basis for an iterative architecture design process. We assume the use of the ITER Integrated Modelling & Analysis Suite (IMAS) Data Dictionary as a standard ontology for making data and metadata interoperable across the various EU experiments. The resulting architecture consists of three main building blocks, namely Metadata Ingests, Central Fair4Fusion Services, and Search and Access Services. In the figure we present a simplified version of this high-level architecture.

Metadata Ingests are the entry point to the system for the metadata produced by experiments. In the proposed design, Metadata Ingests stay within the administration of particular experiments, so the experiments themselves can filter or amend data before they decide to expose it to the rest of the system. From Metadata Ingests the metadata is transferred to the next block of the system, i.e. Central Fair4Fusion Services. The Core Metadata Services, being the heart of this block and of the entire system in general, operate on the IMAS data format, but thanks to the translation components can accept different formats of metadata as input. Central Fair4Fusion Services provide supplementary functionality for specification of data that is not strictly tied to experiments, such as user-level annotations or citations. The last main block of the system is a set of Search and Access Services. It contains all the user-oriented client tools that integrate with the Central Fair4Fusion Services. At this level of the system, key importance is given to the Web Portal that is expected to offer an extensive set of functionalities for searching, filtering or displaying metadata and data managed within the system.

Speaker: Marcin Plociennik (PSNC) -

25

Exposing and facilitating the searching of fusion data using an Open API-based data acquisition system supporting multiple data-access methods

Fusion experiments (WEST, MAST, JET, etc.) produce large amounts of data. In the future, we can expect ITER to produce an equally large amount of raw data from each and every shot.

Even though the fusion community as a whole collects large data sets, scientists suffer from the lack of an amalgamated shot catalogue and from differing access requirements. At the moment, finding data for a given shot requires access to all sites where experiments are run, the ability to use different data formats (HDF5, MDSplus, raw data), and access to the storage locations where the databases are kept. It is also not possible to search for given physical characteristics of the data, as there is no single, unified storage format or ontology.

Catalogue QT 2 and the Fair4Fusion Dashboard aim to solve some of these issues. By using the IMAS data format and storing a reduced description of experimental results (the metainformation is stored in the so-called Summary IDS), we provide scientists with a convenient way of browsing, searching and (in the future) obtaining experimental data. By combining information from various sources (MAST, WEST, etc.) and different acquisition techniques (UDA (Universal Data Access), MDSplus files, text-based file formats, etc.), we are able to provide users with a consistent view of, and search functionality over, data coming from different sources. By developing and combining loosely coupled components based on Web Services, we can present data to users not only via a dedicated user interface (a Web Application created with ReactJS) but also via command-line tools and Jupyter Notebook based scripts. Openly available APIs allow connections from third-party applications, as long as they belong to the same Federated Authentication and Authorisation Infrastructure. By delegating authorization and authentication to external Identity Providers, we were able to move user management out of the scope of the application itself. This way, we can provide multiple, independent installations of Catalogue QT 2 that all benefit from the common Authentication and Authorisation Infrastructure. Catalogue QT 2 can be installed in virtually any environment, since we provide both bare-metal installations and Docker-based components.
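Searching by physical characteristics becomes possible once every site's shots are reduced to a common summary description. A minimal Python sketch of that idea: per-site adapters translate local records into one shared summary form, and a single query then runs over the merged catalogue. The site record layouts, keys and values are invented for illustration and do not reproduce the Summary IDS or Catalogue QT 2's data model.

```python
from typing import Callable, Dict, Iterable, List, Tuple

Summary = Dict[str, object]


def from_site_a(rec: dict) -> Summary:
    # Hypothetical site A stores plasma current in MA under "IPLA".
    return {"site": "A", "shot": rec["pulse"], "ip": rec["IPLA"] * 1e6}


def from_site_b(rec: dict) -> Summary:
    # Hypothetical site B already stores current in A, under a different key.
    return {"site": "B", "shot": rec["shot_number"], "ip": rec["plasma_current"]}


def build_catalogue(
    sources: Iterable[Tuple[Callable[[dict], Summary], List[dict]]]
) -> List[Summary]:
    """Merge records from several sites into one list of common summaries."""
    return [adapter(rec) for adapter, records in sources for rec in records]


def search(catalogue: List[Summary], predicate: Callable[[Summary], bool]) -> List[Summary]:
    """Run one query across all sites at once."""
    return [s for s in catalogue if predicate(s)]
```

For example, `search(catalogue, lambda s: s["ip"] > 1e6)` returns matching shots from both sites in a single call, which is exactly what is impossible when each site keeps its own format and ontology.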

This paper presents the architecture of a proposed solution, ways of combining different, loosely-coupled components coming from different projects and possible future directions of Catalogue QT 2’s evolution.

Keywords: IMAS, MDSPlus, data acquisition, fusion experiments, data analysis, web services, Docker, Authentication and Authorisation Infrastructure

Speaker: Mr Michal Owsiak (Poznan Supercomputing and Networking Center, IBCh PAS) -

26

A custom toolchain for WEST legacy LynxOS subsystems

Despite continued efforts to build a standardized infrastructure through regular hardware and software upgrades and refactoring, the WEST CODAC still includes several subsystems based on Motorola PowerPC VME boards running LynxOS V3.1. Maintaining and operating more than 10 such systems is a major challenge for the years to come, since some of them, such as the poloidal field control and monitoring system (DGENE), are critical for tokamak operation and plasma control.

For software development and deployment on these targets, a 30-year-old native VME compiler running on LynxOS was used until very recently. It was never upgraded, to avoid instabilities and system incompatibilities on critical equipment. To remove the looming risk of hardware malfunction, a complete replacement toolchain was designed. The proposed cross-compiler is based on QEMU, which provides a virtualized, emulated PowerPC environment. It also uses Debian 7 "Wheezy", a 32-bit Linux distribution that provides PowerPC support. The gcc 2.94 compiler, the last version to support LynxOS V3.1, was customized within this virtual PowerPC environment in order to cross-compile functional binaries targeting the Motorola PowerPC VME boards.

The custom toolchain was qualified during the WEST C4 experimental campaign following a request to modify the code of DGENE. Subsequently, the toolchain was included within the WEST framework allowing for automatic deployments using the WEST continuous integration workflow and automatic software quality control for all legacy VME subsystems, with a clear impact on reliability and maintainability of some of the oldest systems on WEST.

Speaker: Mr Gilles CAULIER (CEA-IRFM, F-13108 Saint-Paul-lez-Durance, France)

-

21

-

16:20

Break

-

Fast Network Technology and its Application

Convener: Didier Mazon (CEA Cadarache)

-

27

Assessment of IEEE 1588-based timing system of the ITER Neutral Beam Test Facility

MITICA is one of the two ongoing experiments at the ITER Neutral Beam Test Facility (NBTF) located in Padova (Italy). MITICA aims to develop the full-size neutral beam injector of ITER and, as such, its Control and Data Acquisition System will adhere to ITER CODAC directives. In particular, its timing system will be based on the IEEE 1588 PTPv2 protocol and will use the ITER Time Communication Network (TCN).

Following the ITER device catalog, National Instruments PXI-6683(H) PTP timing modules will be used to generate triggers and clocks that are synchronized with a PTP grandmaster clock. Data acquisition techniques such as lazy triggers [1] will also be used to implement event-driven data acquisition without the need for any hardware link beyond the Ethernet connections used to transfer data and timing synchronization.
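For context on how a slave device such as the PXI-6683(H) stays synchronized with the grandmaster: in the IEEE 1588 delay request-response mechanism, the slave derives its clock offset and the mean path delay from four timestamps, assuming the path delay is the same in both directions. A minimal Python sketch of that arithmetic (timestamps in nanoseconds; the example numbers are made up). The transparent and boundary clocks studied in this work exist to keep these quantities accurate when switches sit between master and slave.

```python
from typing import NamedTuple, Tuple


class PtpExchange(NamedTuple):
    t1: int  # Sync sent, master clock [ns]
    t2: int  # Sync received, slave clock [ns]
    t3: int  # Delay_Req sent, slave clock [ns]
    t4: int  # Delay_Req received, master clock [ns]


def offset_and_delay(x: PtpExchange) -> Tuple[float, float]:
    """Return (offset_from_master, mean_path_delay) in ns.

    Valid under the protocol's assumption of a symmetric path: with forward
    delay d1 and reverse delay d2, the computed offset is off by (d1 - d2) / 2.
    """
    master_to_slave = x.t2 - x.t1
    slave_to_master = x.t4 - x.t3
    offset = (master_to_slave - slave_to_master) / 2
    delay = (master_to_slave + slave_to_master) / 2
    return offset, delay
```

For a slave running 500 ns ahead of the master over a 1000 ns path, `offset_and_delay(PtpExchange(0, 1500, 2000, 2500))` gives `(500.0, 1000.0)`; the clock servo then steers the slave to drive the offset towards zero.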

In order to evaluate the accuracy over time that can be achieved with different network topologies and configurations, a test system has been set up, consisting of a grandmaster clock, two PXI-6683(H) devices and two PTP-aware network switches. In particular, the impact on accuracy of the transparent and boundary clock configurations has been investigated. In addition, a detailed simulation of the network and the devices involved has been performed using the OMNeT++ discrete event simulator. The simulation parameters include not only the network and switch configuration but also the PID parameters used in the clock servo controllers. A comparison between simulated and measured statistics is reported, together with a discussion of possible optimal configuration strategies.

Speaker: Mr Luca Trevisan (Consorzio RFX)

-

27

-

Remote Experimentation and virtual lab 1

Convener: David Schissel (General Atomics)

-

28

Approach to Remote Participation in the ITER experimental program. Experience from model of Russian Remote Participation Center.

The model of the Russian Remote Participation Center (RPC) was created under a contract between the Russian Federation Domestic Agency (RF DA) and ROSATOM as a prototype of a full-scale Remote Participation Center for ITER experiments and for coordinating activities in the field of Russian thermonuclear research. Its tasks include:

- Investigation of the high-speed data transfer via existing public networks (reliability, speed accuracy, latency)

- Test of ITER remote participation interfaces (Unified Data Access, Data Visualization and Analysis tool, etc.)

- Local large-capacity data storage system (storage capacity of more than 10 TB and disk I/O speed of 300 MB/s)

- Remote monitoring of Russian plasma diagnostics and technical systems

- Security: access to the ITER S3 zone IT infrastructure (S3 – XPOZ, External to Plant Operation Zone) in accordance with cyber security requirements and the IEC 62645 standard

- Participation in ITER main control room activities (remote copy of central screens and diagnostics HMI)

- Access to experimental data

- Local data processing with integration of existing data processing software (visualization, analysis, etc.)

- Scientific data analysis remotely by ITER remote participation interfaces

In this report, the data transfer processes (latency, speed, stability, single- and multi-stream transfer, etc.) and security issues were investigated over two separate L3 connections to the IO, one via a public internet exchange point and one via GÉANT. In addition, we tested various ITER tools for direct remote participation, such as screen sharing and data browsing, over the distance from the RF RPC to the ITER IO (about 3000 kilometers).

Experiments have shown that the EPICS gateway is the most stable and flexible option for live data display and for creating a control room effect. Together with the ITER dashboard, these tools make it possible to emulate almost any functional part of the MCR at the remote participant's site. This approach allows us to create our own mimics and to customize the Control System Studio (CSS) HMIs for our needs. Today, using these tools, we can integrate various systems remotely without any major restrictions.

For data mirroring tasks, UDA server replication is an option. It may improve the performance of the data browsing tools and of other tasks involving archived data. To obtain the best performance, it is very important to find a multithreaded (multi-stream) data replication solution between UDA servers.
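The multi-stream replication idea can be sketched with a thread pool that copies several data items concurrently instead of one after another. This is a generic Python illustration, not a UDA feature: `fetch` and `store` are hypothetical callables standing in for reads from the source UDA server and writes to the local mirror, and the default of four streams is arbitrary.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed
from typing import Callable, Dict, Iterable, List


def replicate(
    items: Iterable[str],
    fetch: Callable[[str], bytes],
    store: Callable[[str, bytes], None],
    streams: int = 4,
) -> Dict[str, List[str]]:
    """Copy items using up to `streams` concurrent transfers.

    Returns which items succeeded and which failed, so failures can be retried.
    """

    def copy_one(name: str) -> str:
        store(name, fetch(name))
        return name

    done: List[str] = []
    failed: List[str] = []
    with ThreadPoolExecutor(max_workers=streams) as pool:
        futures = {pool.submit(copy_one, name): name for name in items}
        for fut in as_completed(futures):
            # A finished future either returned normally or raised.
            if fut.exception() is None:
                done.append(futures[fut])
            else:
                failed.append(futures[fut])
    return {"done": sorted(done), "failed": sorted(failed)}
```

Over a long, high-latency link, several streams in flight keep the connection busy while individual requests wait on round trips, which is the motivation for a multi-stream solution.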

The network connection strategy is still under development together with the IO.

Work done under contract Н.4а.241.19.18.1027 with ROSATOM and Task Agreement C45TD15FR with ITER Organization

Speaker: Oleg Semenov (Project Center ITER - Russian Domestic Agency) -

29

A programmable web platform for distributed data access, analysis and visualization

We introduce a novel client-server Web platform for data access, processing, analysis and visualization. The platform was designed to combine a set of capabilities that are not jointly available in similar software systems. The platform: (a) provides secure access to large amounts of data hosted in institutional data servers; (b) allows users to work on any modern device (computers, tablets, even smartphones); (c) is intuitive and provides online help, making it easy to use even for sophisticated data analysis; (d) runs in a distributed environment, profiting from remote hosting and computing power; and (e) allows integration of heterogeneous data and of user-provided data analysis codes in different programming languages. These requirements were inspired by the needs of users analysing the massive databases of nuclear fusion devices, in particular the ITER database.

The client runs on any HTML-enabled device, under virtually any operating system, and allows the user to interactively specify the desired data flow through a series of analysis or visualization routines. This flow takes the form of a graph of nodes (or modules), each represented by an icon with customizable properties. Each icon encapsulates a given data processing algorithm, and the whole set of icons forms a ready-to-use library of standard data handling, analysis and visualization routines. Users conducting the analysis apply their field of expertise to design the desired combination of routines, customize their properties appropriately and, optionally, add new icons with their own code. Each icon can encapsulate routines written in one of several supported programming languages, including Fortran, C, Matlab, Python and R.

To execute graphs, a dedicated server receives a request to traverse the specified graph. Each graph node is then executed in sequence, and its output is connected to the inputs of the following nodes as indicated by the graph (an output can be used as input to several modules). The system transparently takes care of accessing data in remote servers, checking access permissions, transferring data if necessary, running code written in different programming languages on possibly different computing facilities (potentially in parallel), passing data to and from the inputs and outputs of the nodes, and creating the required final output data or visualization plots. The result, also in HTML form, is returned to the client for user inspection. It should be noted that the platform supports not only interactive execution of codes but also batch processing.
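Executing such a graph amounts to running the nodes in a dependency-respecting (topological) order and routing each node's output to every node that consumes it. A minimal single-process Python sketch using the standard `graphlib` module (Python 3.9+); the node names and functions are illustrative, and the platform's remote data access, permission checks, multi-language execution and parallel dispatch are not modelled.

```python
from graphlib import TopologicalSorter
from typing import Callable, Dict, List


def run_graph(
    nodes: Dict[str, Callable[..., object]],
    inputs: Dict[str, List[str]],
) -> Dict[str, object]:
    """Execute a dataflow graph.

    nodes:  node name -> function
    inputs: node name -> names of the nodes whose outputs it consumes, in argument order
    Raises graphlib.CycleError (a ValueError subclass) if the graph has a cycle.
    """
    order = TopologicalSorter({name: inputs.get(name, []) for name in nodes})
    results: Dict[str, object] = {}
    for name in order.static_order():
        # Every predecessor has already run, so its output is available.
        args = [results[src] for src in inputs.get(name, [])]
        results[name] = nodes[name](*args)
    return results
```

Usage, with one output feeding two modules:

```python
nodes = {
    "load": lambda: [1.0, 2.0, 3.0, 4.0],
    "mean": lambda xs: sum(xs) / len(xs),
    "peak": lambda xs: max(xs),
    "report": lambda m, p: f"mean={m}, peak={p}",
}
inputs = {"mean": ["load"], "peak": ["load"], "report": ["mean", "peak"]}
print(run_graph(nodes, inputs)["report"])  # mean=2.5, peak=4.0
```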

The presentation describes the platform, its architectural design and the implementation decisions. We provide an example of use that develops an adaptive disruption predictor from scratch.

Speaker: Prof. Francisco Esquembre (Universidad de Murcia) -

30

Remote Operation of DIII-D Under COVID-19 Restrictions*

The DIII-D National Fusion Facility is a large international user facility with over 800 active participants. Experiments are routinely conducted throughout the year, with the control room as the focus of activity. Although experiments on DIII-D have involved remote participation for decades, and have even been led by remote scientists, the physical control room always remained filled with ~40 scientists and engineers working in close coordination. The severe limits on control room occupancy required in response to the COVID-19 pandemic drastically reduced the number of people physically present in the control room, to the point where DIII-D operations would not have been possible without a significantly enhanced remote participation capability. Leveraging experience gained by General Atomics in operating EAST remotely from San Diego [1], the DIII-D Team was able to deploy a variety of novel software solutions that made the information typically shown on large control room displays available to remote participants. New audio/video solutions were implemented to mimic the dynamic, ad-hoc scientific conversations that are critical to successfully running an experimental campaign on DIII-D. Secure methodologies were put in place that allowed remote participants to control hardware, including DIII-D's digital plasma control software (PCS). Enhanced software monitoring of critical infrastructure allowed the DIII-D Team to be alerted rapidly to issues that might affect operations. Existing tools were expanded and their functionality increased to satisfy new requirements imposed by the pandemic.
Finally, given the mechanical and electrical complexity involved in operating DIII-D, no amount of software could replace the need for "hands on hardware." A dedicated subset of the DIII-D team remained on site and coordinated their work closely with remote team members; this coordination was enhanced by extensions to the wireless network and the use of tablet computers for audio/video/screen sharing. Taken together, these measures have allowed the DIII-D Team to conduct very successful experimental campaigns in 2020 and 2021. This presentation will review the novel computer science solutions that enabled remote operations, examine the efficiency gains and losses, and discuss lessons learned about which changes implemented as a result of the pandemic should remain in place afterwards.

[1] D.P. Schissel, et al., Nucl. Fusion 57 (2017) 056032.

*This work was supported by the US Department of Energy under DE-FC02-04ER54698.

Speaker: David Schissel (General Atomics)

-

28

-

-

-

Remote Experimentation and virtual lab 2

Convener: Mr Mikhail Sokolov (NRC "Kurchatov Institute")

-

31

Study on EPICS Communication over Long Distance

ITER operation is expected to take place not only in the ITER control room itself but also to benefit from human capital from around the globe. Each ITER participating country could create a remote participation room and follow the progress of experiments in near real time. Scientists from all over the world could then collaborate on experiments as they are performed. This is called "remote participation" in ITER.

The ITER control system is based on EPICS, so it is natural to try to extend the use of EPICS to remote participation sites. The authors designed tests to determine how EPICS performance depends on network performance. The goal was to understand whether an EPICS-based application can be used directly on the remote side. A dedicated test suite was developed to see how many process variables (PVs) remote participants can use when they run local operator screens or independent applications. Remote participants in the test were connected via a dedicated VPN channel. The test exercised reading a large number of PVs, up to 10 000, with update frequencies of up to 10 Hz. The performance was compared with equivalent execution on a local network.
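The comparison between remote and local update latency can be sketched as follows. This is a minimal illustration and rests on three assumptions. First, every PV update carries a source timestamp. Second, the clocks on both ends are synchronized. Third, the static, distance-related one-way delay is estimated as half of the smallest measured round-trip time. The helper names (`static_delay_from_rtts`, `adjusted_latencies`, `summarize`) are hypothetical and belong neither to the authors' test suite nor to EPICS. At the upper end of the test (10 000 PVs at 10 Hz), a client must absorb up to 100 000 updates per second, so the sketch reports summary statistics rather than individual samples.

```python
import math
from statistics import median

def static_delay_from_rtts(rtts_s):
    """Estimate the static one-way delay (s) as half the smallest round-trip time.
    Assumption: the network path is roughly symmetric."""
    return min(rtts_s) / 2.0

def adjusted_latencies(samples, static_delay_s):
    """samples: iterable of (source_timestamp_s, receive_time_s), one per PV update.
    Assumes both clocks are synchronized (e.g. via NTP or PTP).
    Returns per-update latencies with the static distance-related delay removed."""
    return [recv - src - static_delay_s for src, recv in samples]

def summarize(latencies_s):
    """Median, nearest-rank 95th percentile and maximum of a latency sample."""
    ordered = sorted(latencies_s)
    p95_index = max(0, math.ceil(0.95 * len(ordered)) - 1)
    return {
        "median": median(ordered),
        "p95": ordered[p95_index],
        "max": ordered[-1],
    }

# Usage with synthetic numbers (not measured data):
local = [(0.0, 0.001), (0.1, 0.102), (0.2, 0.203)]
remote = [(0.0, 0.121), (0.1, 0.222), (0.2, 0.323)]
delay = static_delay_from_rtts([0.240, 0.250, 0.245])
print(summarize(adjusted_latencies(local, 0.0)))
print(summarize(adjusted_latencies(remote, delay)))
```

Subtracting the static delay separates the part of the latency that is due only to distance from the part caused by EPICS and network load. Only the second part is meaningful when comparing remote and local behaviour.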

With a large number of frequently updated PVs, the update latency on the remote participant's side, after correcting for the static network delay due to distance, was shown to be comparable to the latency of local execution. This suggests that EPICS over long distances is quite usable for ITER remote participation tasks.

Speaker: Mr Leonid Lobes (Tomsk Polytechnic University)

-

32

Remote Participation in ITER Systems Commissioning

ITER is now in its full construction phase, with many plant systems being installed and commissioned. Plant service systems (electricity, liquid and gas supplies, water cooling, building monitoring) are being gradually commissioned and handed over for operation. Other systems, such as plasma diagnostics, are being developed and tested on site or at the ITER parties and will be installed later during machine assembly. The remote participation function of the ITER control system has always been oriented towards the plasma operation phase and has not specifically addressed the commissioning phase of the systems. Because the systems are often procured by the ITER parties, suppliers have recently shown significant interest in following the commissioning activities as well. Recent restrictions on workforce travel have increased this interest further. Consequently, some remote participation elements under development had to be put in place or adapted ahead of schedule.

This contribution summarizes the status of the remote participation design at ITER and illustrates several particular use cases. From the architecture point of view, it discusses the implications of remote participation for the design of the control system network and services, and it explores different ways of connecting remotely. From the plant systems point of view, it illustrates remote follow-up of a “slow” electricity supply system that produces repetitive data readings, as well as follow-up of a “fast” diagnostic system that produces scientific data in test mode. From the operational mode point of view, it mostly discusses systems with read-only follow-up, but it also explains the approach to interactive participation in system tuning.

Speaker: Mr Denis Stepanov (ITER Organization) -

33

Lessons learned for remote operation on ASDEX Upgrade during the Covid-19 pandemic

With the outbreak of the Covid-19 pandemic in spring 2020, experimental operations at ASDEX Upgrade came to a temporary halt. For health reasons and due to regulations, many of the people needed for operations and the scientific programme could not enter the institute premises, and only a very small number of people were allowed into the control room itself.

However, thanks to the use and optimisation of various existing and new tools, experimental operations at ASDEX Upgrade could be resumed after a short time with almost no restrictions. The programme planned for 2020, both by internal proponents and with the participation of partners from EUROfusion and other international associations, was almost fully completed. Likewise, the planning and implementation of the current 2021 campaign could continue as usual.

This contribution presents the tools provided by CODAC to enable and facilitate the smooth running of ASDEX Upgrade with a minimum number of people present on site, especially with regard to the planning, preparation and execution of the experiments as well as their evaluation and the scientific review of the results. Further possibilities for improvement are also discussed.

Several of these tools not only enable experiments to be conducted with remote participation but can also improve the efficiency of experimental operation. They are therefore likely to remain in use after the end of the pandemic. Furthermore, the experience gained here can contribute to the discussion of what efficient remote participation might look like at other experiments such as JT-60SA or ITER.

Speaker: J. Christoph Fuchs (Max-Planck-Institut für Plasmaphysik) -

34

JET operations under Covid-19 restrictions

During March 2020 it became obvious that Covid-19 infection rates were accelerating in the UK and that the country was heading for a national lockdown. It was decided to put JET into a safe state. The site was then shut down except for a skeleton staff that ensured essential safety and security, and everyone else worked from home. Over the next couple of months, arrangements were made to bring maintenance teams back on site to ensure the integrity of the JET plant, so that it could be restarted when conditions permitted. Plans were prepared to limit operational staff in the JET Control Room and surrounding areas, allowing a return to work while maintaining Covid-19 distancing. A major refurbishment of the Control Room HVAC system had already been planned. Until it was completed, the maximum number of people in the area was limited to 10, all required to wear face coverings. To reduce the number of people in the Control Room from the usual 20–30, workstations had to be relocated to a meeting room in the same building. This involved considerable re-cabling and extending the JET operational networks beyond their usual areas. In addition, arrangements were made for many roles to be carried out from offices or even off-site using our existing remote access system. The number of video conference channels (Zoom rooms) dedicated to operations was increased from 1 to 3 and then to 4 to enable communication between the Control Room staff and remote operators. MS Teams supplemented these channels for more ad-hoc communication. Our operations and plant mimics, an in-house development based on Oracle/Solaris, were web-enabled and made accessible from the office network. Our real-time plasma operations camera system was augmented to provide web-based streaming video, including live Torus Hall audio.
Work is also ongoing to convert many of our paper-based forms for the approval of operational exceptions and of work in controlled plant areas into integrated computer-based workflows. All these measures have proved very successful and enabled us to restart JET operations. In several cases they have made operations more effective by giving remote experts easier access to operational information and giving control room staff easier access to remote experts. Operations initially resumed with deuterium (²H) plasmas and have now moved on to 100% tritium plasmas. We are now preparing for an increased return to site. Following completion of the HVAC refurbishment, more people will be allowed back into the Control Room, ready for DT plasmas later in the year.

Speaker: Dr John Waterhouse (UKAEA) -

35

Current developments on ASDEX Upgrade data acquisition systems

The ASDEX Upgrade diagnostics have provided scientists with the experimental data required to advance the fusion field for 30 years. Over this time, the systems and diagnostics of the machine have evolved, and many solutions have combined commercial products with in-house, state-of-the-art data acquisition hardware. However, given the rapid advances of the computing industry and the long lifetimes of fusion machines, it is not uncommon to find dated systems working alongside more modern ones. At some point a line has to be drawn and systems updated to support newer architectures, which provide access to more modern tools. Nevertheless, simply rewriting otherwise functional programs is not always feasible or effective. For the ASDEX Upgrade diagnostics, the time has come to undo this entanglement and draw up a clear strategy for modern data acquisition systems. The Discharge Control System (DCS) team at ASDEX Upgrade has already advanced this work by providing clear development and integration pipelines for some of the ASDEX Upgrade diagnostics. However, supplying the current diagnostics with modern DAQ systems, on the order of hundreds, required more robust tools. We introduce a new data acquisition plan that uses standardization layers based on the ITER Nominal Device Support (NDS v3). Such frameworks are often used to plan highly modular and maintainable systems for the future, but here the same traits help modularize and integrate existing systems. New diagnostics access modernized systems integrated using NDS v3. Old diagnostics, which may be replaced in a more staggered manner, benefit from the new systems by adopting either a simple communication layer or a C++ wrapper around their otherwise perfectly functional C drivers.
The new communication interfaces are standard and are re-used by any NDS driver. This saves time and allows future drivers to connect to either the real-time or the standard ASDEX Upgrade diagnostics network. The work presents the current status and the plans for the fully deployed new diagnostics. It also presents the development, test and deployment strategies that bring the ASDEX Upgrade diagnostics up to modern standards. A prototype system was built with these technologies on CentOS, and preliminary conclusions are presented.
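The idea of letting new drivers and wrapped legacy drivers share one communication layer can be illustrated with the adapter pattern. The sketch below is written in Python for brevity and does not use the NDS v3 API. `AcquisitionDriver`, `LegacyDevice`, `LegacyDriverAdapter`, `ModernDriver` and `publish` are hypothetical names. `LegacyDevice` only stands in for an existing C driver with its own calling convention and C-style status codes.

```python
from abc import ABC, abstractmethod

class AcquisitionDriver(ABC):
    """Hypothetical common interface seen by the shared communication layer
    (illustrative only, not the NDS v3 API)."""

    @abstractmethod
    def configure(self, sample_rate_hz): ...

    @abstractmethod
    def read(self, n_samples): ...

class LegacyDevice:
    """Stand-in for an old but functional C driver with its own conventions."""

    def __init__(self):
        self.clock_hz = 0

    def dev_set_clock(self, hz):
        self.clock_hz = hz
        return 0  # C-style status code: 0 means success

    def dev_read_block(self, buf, count):
        buf.extend(float(i) for i in range(count))  # fake ramp data
        return count  # number of samples written

class LegacyDriverAdapter(AcquisitionDriver):
    """Wraps the legacy driver so it satisfies the common interface unchanged."""

    def __init__(self, device):
        self._dev = device

    def configure(self, sample_rate_hz):
        if self._dev.dev_set_clock(int(sample_rate_hz)) != 0:
            raise RuntimeError("legacy driver rejected the clock setting")

    def read(self, n_samples):
        buf = []
        got = self._dev.dev_read_block(buf, n_samples)
        if got != n_samples:
            raise RuntimeError(f"expected {n_samples} samples, got {got}")
        return buf

class ModernDriver(AcquisitionDriver):
    """A new driver written directly against the common interface."""

    def __init__(self):
        self.sample_rate_hz = None

    def configure(self, sample_rate_hz):
        self.sample_rate_hz = sample_rate_hz

    def read(self, n_samples):
        return [0.0] * n_samples  # placeholder data

def publish(driver, network, n_samples):
    """Shared communication layer: one code path for every driver generation."""
    return {"network": network, "samples": driver.read(n_samples)}

# Usage: the same publish() call serves old and new hardware.
legacy = LegacyDriverAdapter(LegacyDevice())
legacy.configure(1e6)
print(publish(legacy, "real-time", 4))
print(publish(ModernDriver(), "standard", 2))
```

Because `publish` depends only on the common interface, the real-time and standard network paths do not need to know which generation of hardware produced the data.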

Speaker: Mr Miguel Astrain (Max-Planck-Institut für Plasmaphysik (IPP)) -

36

A Plant System Configuration Tool based on MDSplus and EPICS for the ITER Neutral Beam Test Facility experiment SPIDER

The SPIDER experiment (Source for the Production of Ions of Deuterium Extracted from a Radio frequency plasma) is a prototype devoted to heating and diagnostic neutral beam studies. It is in operation at the ITER Neutral Beam Test Facility (NBTF) at Consorzio RFX, Padova. SPIDER is the full-size ITER ion source prototype and the largest negative ion source in operation in the world. To meet the ITER heating requirements for reaching burning plasma conditions and controlling instabilities, SPIDER aims at long-pulse operation (3600 s) with beam energy up to 100 keV. It also aims at high extracted current density (above 355 A·m⁻² for H⁻ and above 285 A·m⁻² for D⁻) at a maximum beam source pressure of 0.3 Pa. Moreover, the maximum deviation from uniformity must be kept below 10% [1].
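The quantitative targets quoted above can be expressed as a simple post-pulse check. This is a sketch under two stated assumptions, since the abstract specifies neither definition. First, the extracted current density is taken as the mean over beam segments. Second, the deviation from uniformity is defined as the largest relative deviation of any segment from that mean. The function names are hypothetical.

```python
# Targets quoted in the abstract; extracted current density in A/m^2.
TARGET_CURRENT_DENSITY = {"H-": 355.0, "D-": 285.0}
MAX_SOURCE_PRESSURE_PA = 0.3
MAX_NONUNIFORMITY = 0.10  # 10 %

def mean(values):
    return sum(values) / len(values)

def nonuniformity(segment_densities):
    """Assumed definition: largest relative deviation of a segment from the mean."""
    m = mean(segment_densities)
    return max(abs(j - m) for j in segment_densities) / m

def check_targets(species, segment_densities, source_pressure_pa):
    """Return a pass/fail flag per target (hypothetical helper)."""
    return {
        "current_density": mean(segment_densities) > TARGET_CURRENT_DENSITY[species],
        "source_pressure": source_pressure_pa <= MAX_SOURCE_PRESSURE_PA,
        "uniformity": nonuniformity(segment_densities) < MAX_NONUNIFORMITY,
    }

# Usage with invented numbers (not SPIDER data):
print(check_targets("H-", [360.0, 370.0, 380.0, 390.0], 0.3))
```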

The SPIDER pulse preparation follows a strict approval procedure for the operation parameters, for reasons of safety, machine protection and efficiency. In simplified terms, the session leader (SL) defines the parameters that best implement the science program. The technical responsible (RT) then verifies all parameters. Only after approving the setup does the RT send the configuration to the technical operator (OT), who loads it into the SPIDER instrumentation.
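The SL, RT, OT sequence described above can be modelled as a small state machine in which each transition is allowed for only one role. The states, the action names and the `PulseConfig` class below are hypothetical. The abstract describes only the roles and the order of approval, not how SPIDER enforces it.

```python
from enum import Enum, auto

class State(Enum):
    DRAFT = auto()      # SL is editing parameters
    SUBMITTED = auto()  # waiting for RT verification
    APPROVED = auto()   # RT has approved; OT may load
    LOADED = auto()     # OT has loaded it into the instrumentation

# Allowed transitions: (current state, role, action) -> next state.
TRANSITIONS = {
    (State.DRAFT, "SL", "submit"): State.SUBMITTED,
    (State.SUBMITTED, "RT", "approve"): State.APPROVED,
    (State.APPROVED, "OT", "load"): State.LOADED,
}

class PulseConfig:
    """Hypothetical pulse configuration guarded by role-based transitions."""

    def __init__(self, params):
        self.params = dict(params)
        self.state = State.DRAFT

    def edit(self, role, **changes):
        # Only the SL may change parameters, and only before submission.
        if role != "SL" or self.state is not State.DRAFT:
            raise PermissionError(f"{role} cannot edit a {self.state.name} configuration")
        self.params.update(changes)

    def act(self, role, action):
        key = (self.state, role, action)
        if key not in TRANSITIONS:
            raise PermissionError(f"{role} cannot {action} a {self.state.name} configuration")
        self.state = TRANSITIONS[key]
        return self.state

# Usage with an invented parameter name:
cfg = PulseConfig({"rf_power_kw": 100.0})
cfg.act("SL", "submit")
cfg.act("RT", "approve")
print(cfg.act("OT", "load"))
```

Encoding the allowed transitions as data keeps the procedure auditable, and any out-of-order step (for example, the OT loading an unapproved configuration) raises an error instead of silently succeeding.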

The current tools, used during SPIDER integrated commissioning and the initial SPIDER campaign [2], allow the SL to design a new pulse and program the set of parameters directly into a temporary MDSplus pulse file. This information is then passed to the RT for approval. The RT can view the same pulse file, but nothing indicates which parameters changed since the previous pulse or relative to a preset pulse file taken from a previous run. Consequently, the RT must go through a tedious and error-prone procedure of checking all parameters, even if the set of parameters has already been approved for a previous pulse. Moreover, the current tools do not support automatically loading previously set configurations. The only way to load previous setups from an executed or reference pulse is through a command line.
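The missing feature described above, showing the RT only what changed relative to the previous or reference pulse, amounts to a parameter diff. Here is a minimal sketch. It assumes the pulse parameters have already been read into plain dictionaries keyed by parameter path. In practice they would come from MDSplus tree nodes, and the keys used here are invented for illustration.

```python
def diff_parameters(reference, proposed):
    """Compare two flat parameter sets (dicts keyed by parameter path)."""
    common = reference.keys() & proposed.keys()
    return {
        "changed": {k: (reference[k], proposed[k])
                    for k in sorted(common) if reference[k] != proposed[k]},
        "added": {k: proposed[k] for k in sorted(proposed.keys() - reference.keys())},
        "removed": {k: reference[k] for k in sorted(reference.keys() - proposed.keys())},
    }

def parameters_to_review(approved_reference, proposed):
    """Paths the RT still has to check: everything differing from an approved pulse."""
    d = diff_parameters(approved_reference, proposed)
    return sorted(set(d["changed"]) | set(d["added"]) | set(d["removed"]))

# Usage with hypothetical parameter paths:
previous = {"RF.POWER": 100.0, "GAS.PRESSURE": 0.3, "EXTR.VOLTAGE": 8.0}
proposed = {"RF.POWER": 120.0, "GAS.PRESSURE": 0.3, "BIAS.CURRENT": 50.0}
print(parameters_to_review(previous, proposed))
```

With such a diff, unchanged parameters that were already approved for an earlier pulse drop out of the RT's checklist automatically.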

A new configuration tool for setting the SPIDER pulse parameters is under development. It aims to automate the implementation of rules and procedures and to improve usability, safety and interoperability between the SL and RT. The tool integrates two tools widely used in the fusion I&C community: MDSplus and EPICS.

This contribution focuses on (i) the present solution used during the initial SPIDER campaigns; (ii) the requirements of the configuration tool according to the set of procedures to be implemented in the SPIDER pulse preparation; (iii) the set of development tools available for implementing the necessary application(s); (iv) the design options and application architecture; and (v) the implementation details and preliminary tests of the alpha release of the application.

[1] V. Toigo, et al., Nucl. Fusion 59 (2019) 086058.

[2] A. Luchetta, et al., Fusion Eng. Des. 146, Part A (2019) 500–504.

Speaker: Nuno Cruz (Instituto de Plasmas e Fusão Nuclear, Instituto Superior Técnico, Universidade de Lisboa, 1049-001, Lisboa, Portugal)

-

15:00

Break

-

Data Acquisition and signal processing 1

Convener: Dr Hideya Nakanishi (National Institute for Fusion Science)

-

37

Fast optimization of the central electron and ion temperature on ASDEX Upgrade based on Iterative Learning Control

Tokamak scenarios are governed by actuator actions that are either pre-programmed as feedforward or requested by feedback controllers based on the actual plasma state. Actions requested by feedback controllers have the advantage that they can react to unpredictable events in the system. However, the reaction always comes with a delay. For that reason, the feedforward trajectories should be prepared so that they bring the system as close as possible to the desired state, leaving the feedback controllers to correct disturbances.
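Iterative Learning Control exploits the repetition of discharges: the feedforward trajectory for the next pulse is corrected using the tracking error of the previous one. The sketch below shows a textbook P-type ILC update, u_next(t) = u(t) + gamma * e(t+1), on a toy first-order discrete-time plant x(t+1) = a x(t) + b u(t). The plant coefficients, learning gain and reference are assumptions chosen for illustration. They are not the ASDEX Upgrade temperature model or the authors' algorithm. With a = 0.5, b = 1 and gamma = 0.5, the tracking error shrinks by at least a factor of 2/3 per iteration in the 2-norm, so convergence is monotonic.

```python
import math

def simulate(u, a=0.5, b=1.0):
    """Toy plant x[t+1] = a*x[t] + b*u[t], output y = x, starting from x[0] = 0.
    Returns [y[1], ..., y[N]] for inputs [u[0], ..., u[N-1]]."""
    x = 0.0
    y = []
    for u_t in u:
        x = a * x + b * u_t
        y.append(x)
    return y

def rms(values):
    return math.sqrt(sum(v * v for v in values) / len(values))

def ilc(reference, iterations=30, gain=0.5, a=0.5, b=1.0):
    """P-type ILC: after each trial, u[t] += gain * e[t+1].
    Index t of `reference` and `error` refers to sample t+1, so the
    one-step shift of the update law is already built in.
    Returns the feedforward input for the next trial and the RMS error per trial."""
    u = [0.0] * len(reference)  # first trial: no feedforward
    history = []
    for _ in range(iterations + 1):
        y = simulate(u, a, b)
        error = [r - y_t for r, y_t in zip(reference, y)]
        history.append(rms(error))
        u = [u_t + gain * e_t for u_t, e_t in zip(u, error)]
    return u, history

# Usage: learn to track a ramp to a plateau over 60 samples.
reference = [min(1.0, t / 20) for t in range(1, 61)]
u_ff, errors = ilc(reference)
print(f"RMS error: first trial {errors[0]:.3f}, last trial {errors[-1]:.2e}")
```

In this scheme the learned `u_ff` plays the role of the prepared feedforward trajectory, while a feedback controller (not modelled here) would still handle disturbances that do not repeat from pulse to pulse.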